Some unusual but wonderful thinking about LLMs.

Some links about ebooks and tutorials.

This post logs miscellaneous tips.

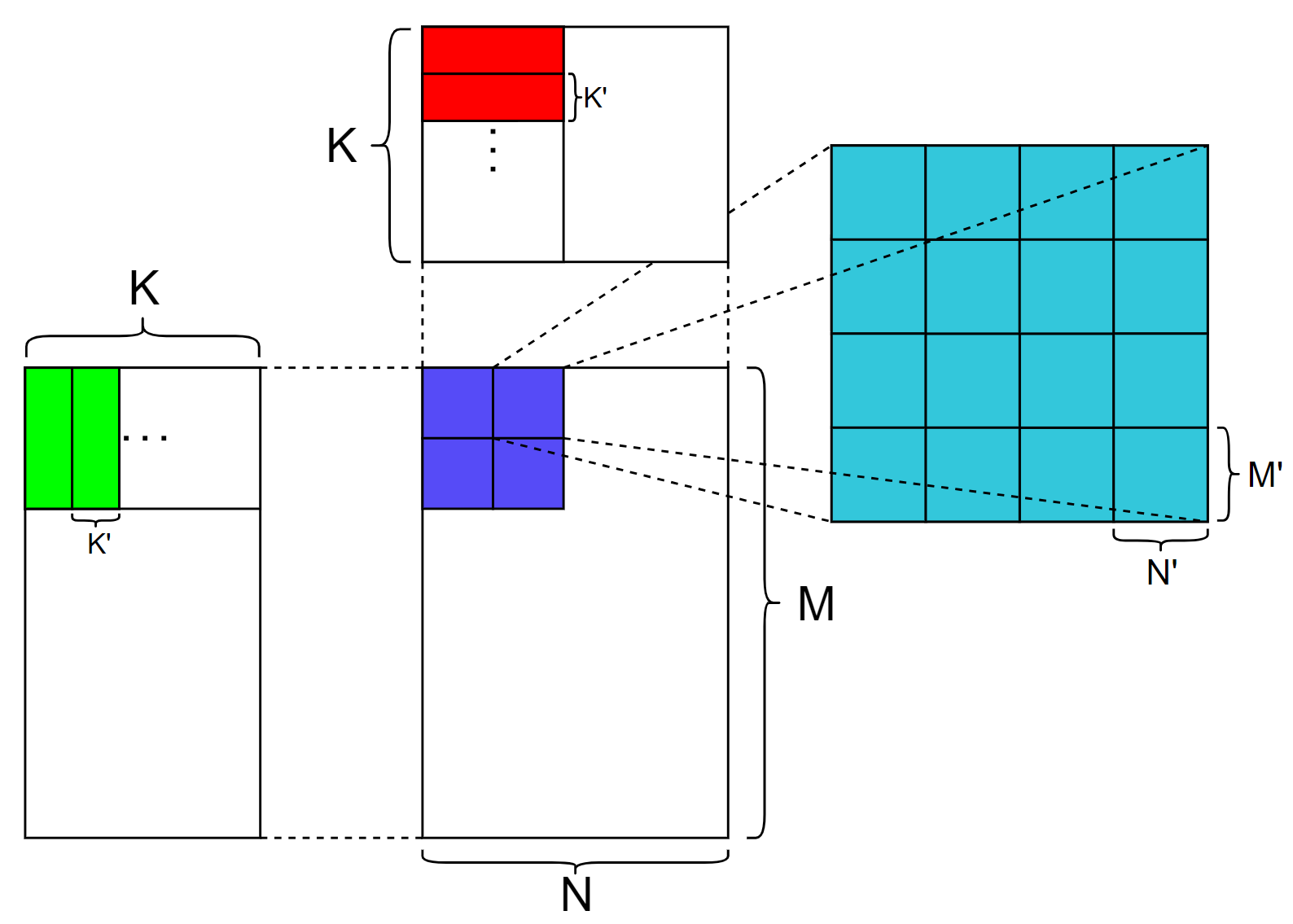

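A step-by-step GEMM optimization series: each post introduces one optimization concept and compares the resulting change in performance.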

CUTLASS uses abstract layouts to express the mapping from a logical index to a physical index.
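To make the idea concrete, here is a minimal sketch in plain C++ of what such a mapping can look like. The names `Coord`, `RowMajorLayout`, `ColumnMajorLayout`, `load`, and `check_layouts` are hypothetical and chosen only for illustration; they are not CUTLASS's own classes, which are considerably richer. The assumption is a 2D matrix stored in a flat buffer with a leading dimension `ld`.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical illustration types; not part of CUTLASS.
// A logical coordinate inside a 2D matrix.
struct Coord {
    std::size_t row;
    std::size_t col;
};

// Row-major: elements of one row are contiguous.
// ld is the leading dimension (distance between consecutive rows).
struct RowMajorLayout {
    std::size_t ld;
    constexpr std::size_t operator()(Coord c) const { return c.row * ld + c.col; }
};

// Column-major: elements of one column are contiguous.
// ld is the distance between consecutive columns.
struct ColumnMajorLayout {
    std::size_t ld;
    constexpr std::size_t operator()(Coord c) const { return c.col * ld + c.row; }
};

// The accessor only sees logical coordinates; the layout decides
// where the element physically lives in the flat buffer.
template <typename Layout>
float load(const float* data, Layout layout, Coord c) {
    return data[layout(c)];
}

// Small self-check on a 2x3 matrix stored in a 6-element buffer.
void check_layouts() {
    const float buf[6] = {0.f, 1.f, 2.f, 3.f, 4.f, 5.f};
    RowMajorLayout rm{3};     // 3 columns per row
    ColumnMajorLayout cm{2};  // 2 rows per column

    assert(rm({1, 0}) == 3);  // 1 * 3 + 0
    assert(cm({1, 0}) == 1);  // 0 * 2 + 1
    assert(load(buf, rm, {1, 0}) == 3.f);
    assert(load(buf, cm, {1, 0}) == 1.f);
}
```

Because `load` is templated on the layout, the same logical access `{row, col}` works for either storage order, which is the point of separating logical indices from physical ones.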

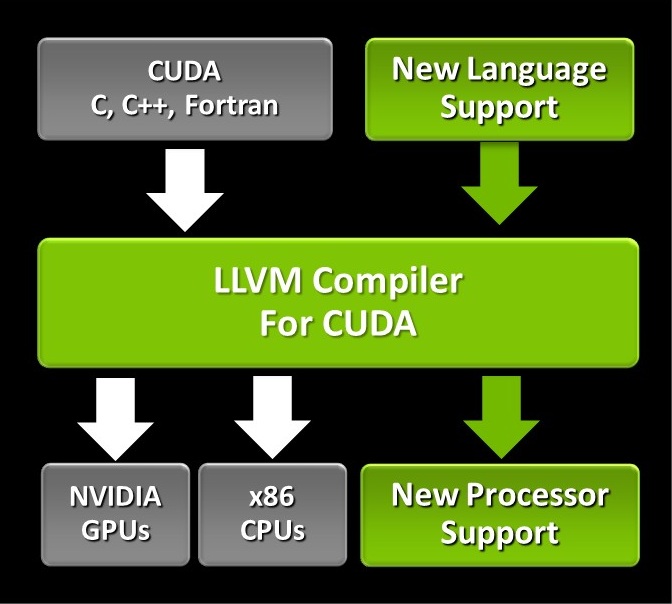

NVIDIA’s CUDA Compiler (NVCC) is based on the widely used LLVM open source compiler infrastructure. Developers can create or extend programming languages with support for GPU acceleration using the NVIDIA Compiler SDK.

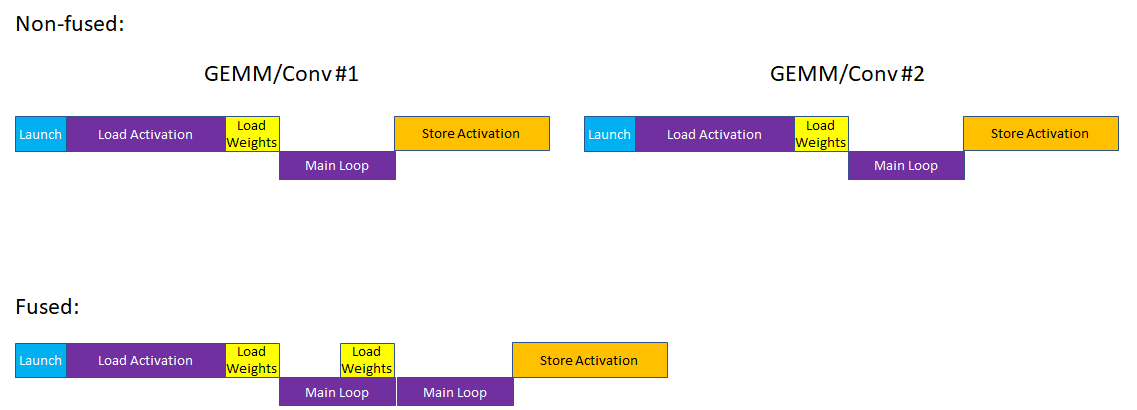

The CUTLASS repository provides many examples for learning the library. This post introduces 13_two_tensor_op_fusion.

Warp-level GEMMs may be implemented either by Tensor Cores issuing mma.sync or wmma instructions, or by thread-level matrix computations issued to CUDA cores. WMMA is a CUDA C++ API for using Tensor Cores; to use Tensor Cores through mma.sync, you must write PTX via inline asm.

I have always wondered why CUTLASS provides many kinds of GEMM implementations instead of just one. In my opinion, the best GEMM implementation differs from situation to situation, and that is what distinguishes CUTLASS from cuBLAS: you can build your own customized GEMM implementation to get the best performance.

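At noon I saw on Weibo that it was hailing in Guangzhou, but I had not run into any myself. This afternoon, just after I arrived at the supercomputing center, I heard several huge claps of thunder outside and then noticed that it was hailing. I wanted to go out and feel what it is like to be hit by hail, but by the time I got downstairs it had already turned to rain. That hail really did not last long. The last time I saw hail was back in high school, during a mock exam in the classroom shortly before the gaokao; I even went over to the window to take a look.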

CUTLASS 3.0 introduces a new library, CuTe, to describe and manipulate tensors of threads and data.

Learn CUTLASS is a series of tutorials for learning CUTLASS by reading its examples and source code.

CUTLASS is a header-only template library. After reading that, you will be lost in templates.

This website is built with Hexo and Icarus.