learn-cutlass-4

The CUTLASS examples directory offers many samples for learning CUTLASS. This post looks at 13_two_tensor_op_fusion.

What is “two tensor op fusion”?

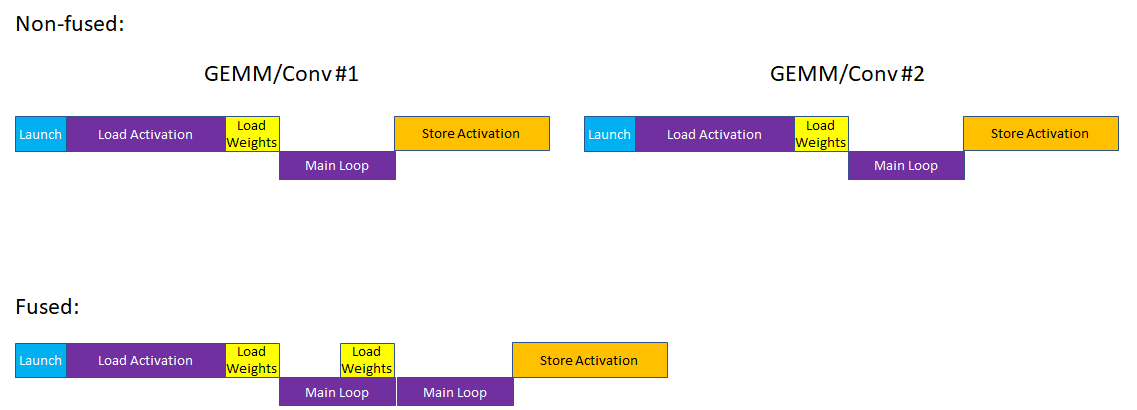

Fusing two back-to-back GEMMs or convolutions into a single kernel, where the output of the first operation is the input of the second.

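Why fuse?

To avoid a round trip to memory for the intermediate result. Without fusion, the first kernel writes its output to memory and the second kernel reads it back.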
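As a rough illustration of what that round trip costs, the sketch below counts only the bytes moved for the intermediate matrix. The function name `intermediate_round_trip_bytes` and the sizes used are assumptions made for this illustration. The model is deliberately simple: it assumes the unfused version writes the M x N0 intermediate once and reads it once, and it ignores caches.

```cpp
#include <cstdint>

// Hypothetical helper (not part of CUTLASS): bytes of memory traffic spent on
// the intermediate matrix D0 (M x N0) when the two GEMMs run as separate
// kernels. Simplified model: one store by the first kernel plus one load by
// the second kernel; caches are ignored.
constexpr std::uint64_t intermediate_round_trip_bytes(std::uint64_t m,
                                                      std::uint64_t n0,
                                                      std::uint64_t bytes_per_element) {
    return 2 * m * n0 * bytes_per_element;
}

// Assumed sizes: M = 1024, N0 = 64, 2 bytes per element (half precision).
static_assert(intermediate_round_trip_bytes(1024, 64, 2) == 262144,
              "2 * 1024 * 64 * 2 bytes");
```

In a fused kernel this traffic disappears, because the intermediate stays on chip.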

How to fuse?

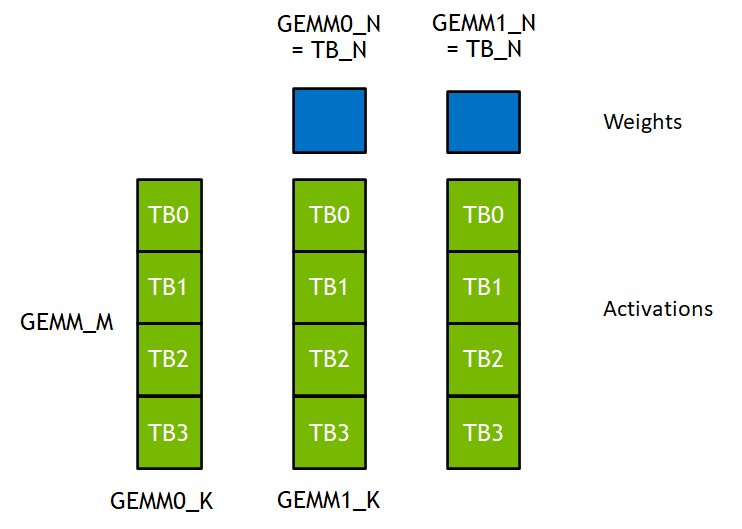

CUTLASS uses threadblockTile and WarpTile to represent the shape of the part of the result matrix that is computed by one threadblock or one warp, respectively.

- First, to fuse two GEMM kernels, both GEMMs must use the same number of threadblocks.

- Second, to reuse the intermediate result, the M dimension of the two GEMMs must be the same.

Otherwise, the input needed by a threadblock of the second GEMM may have been produced by a different threadblock. Similarly, the N dimension of the threadblock tile must equal the N dimension of the GEMM.
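The following CPU sketch mimics these rules to show why they make the fusion self-contained. It is not how the CUTLASS example is written: `Matrix`, `gemm`, and `fused_b2b_gemm` are hypothetical names, each iteration of the outer loop stands in for one threadblock, and the scaling and activation steps of a real epilogue are left out. Because every "threadblock" owns a block of rows and the full N width of the intermediate, the second GEMM never needs data produced elsewhere.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Row-major dense matrix; a hypothetical helper type for this sketch only.
struct Matrix {
    std::size_t rows, cols;
    std::vector<float> data;
    Matrix(std::size_t r, std::size_t c) : rows(r), cols(c), data(r * c, 0.0f) {}
    float& at(std::size_t r, std::size_t c) { return data[r * cols + c]; }
    float at(std::size_t r, std::size_t c) const { return data[r * cols + c]; }
};

// Unfused building block: the whole result is materialized in memory.
Matrix gemm(const Matrix& a, const Matrix& b) {
    assert(a.cols == b.rows);
    Matrix d(a.rows, b.cols);
    for (std::size_t i = 0; i < a.rows; ++i)
        for (std::size_t k = 0; k < a.cols; ++k)
            for (std::size_t j = 0; j < b.cols; ++j)
                d.at(i, j) += a.at(i, k) * b.at(k, j);
    return d;
}

// Fused version: D1 = (A0 * B0) * B1, computed one row tile at a time.
Matrix fused_b2b_gemm(const Matrix& a0, const Matrix& b0, const Matrix& b1,
                      std::size_t tile_m) {
    assert(a0.cols == b0.rows && b0.cols == b1.rows && tile_m > 0);
    const std::size_t n0 = b0.cols;  // tile N equals the full problem N
    Matrix d1(a0.rows, b1.cols);
    for (std::size_t row0 = 0; row0 < a0.rows; row0 += tile_m) {
        const std::size_t rows = std::min(tile_m, a0.rows - row0);
        // Tile-local intermediate; stands in for on-chip storage.
        std::vector<float> d0_tile(rows * n0, 0.0f);
        for (std::size_t i = 0; i < rows; ++i)
            for (std::size_t k = 0; k < a0.cols; ++k)
                for (std::size_t j = 0; j < n0; ++j)
                    d0_tile[i * n0 + j] += a0.at(row0 + i, k) * b0.at(k, j);
        // The second GEMM consumes the tile directly, with no round trip.
        for (std::size_t i = 0; i < rows; ++i)
            for (std::size_t k = 0; k < n0; ++k)
                for (std::size_t j = 0; j < b1.cols; ++j)
                    d1.at(row0 + i, j) += d0_tile[i * n0 + k] * b1.at(k, j);
    }
    return d1;
}
```

Calling `fused_b2b_gemm(a0, b0, b1, tile_m)` should give the same result as `gemm(gemm(a0, b0), b1)`. If a tile covered only part of the N width, the second loop nest would need columns of the intermediate held by another tile, which is exactly the situation the constraints rule out.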

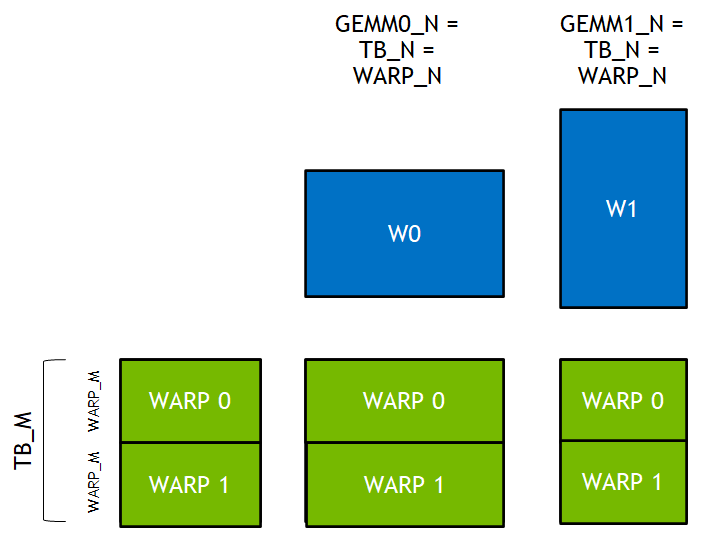

thread_block_tile_N = problem_N - Third, the dimension N of threadblock and the dimension N of warp must be the same when using register,

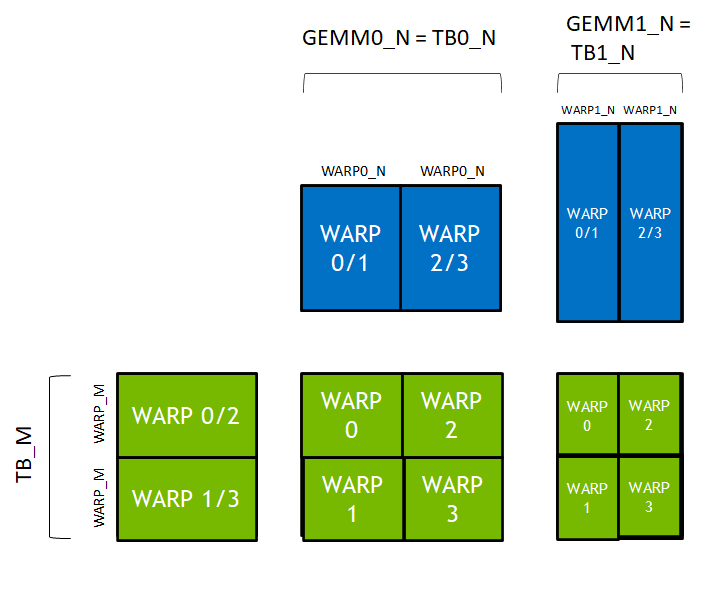

which can be relaxed when using share memory because different warps in a threadblock can exchange data with each other.

warp_tile_N = thread_block_tile_N

relaxed constraints
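The N-dimension constraints described above, including the shared-memory relaxation, can be summarized as a small predicate. This is a minimal sketch and not CUTLASS's own validation code: `IntermediateStorage`, `TileN`, and `can_fuse` are hypothetical names, and the tile sizes in the checks are made up for illustration.

```cpp
// Where the output of the first GEMM lives before the second GEMM reads it.
enum class IntermediateStorage { RegisterFile, SharedMemory };

// N extents involved in the constraints (hypothetical struct).
struct TileN {
    int problem_n;            // N of the GEMM problem
    int thread_block_tile_n;  // N of the threadblock tile
    int warp_tile_n;          // N of the warp tile
};

// True when the tile shapes satisfy the constraints described in this post.
constexpr bool can_fuse(TileN t, IntermediateStorage storage) {
    return t.thread_block_tile_n == t.problem_n &&
           (storage == IntermediateStorage::SharedMemory ||
            t.warp_tile_n == t.thread_block_tile_n);
}

// Register-resident fusion needs warp_tile_N == thread_block_tile_N.
static_assert(can_fuse({64, 64, 64}, IntermediateStorage::RegisterFile), "ok");
static_assert(!can_fuse({64, 64, 32}, IntermediateStorage::RegisterFile), "warp too narrow");
// Staging through shared memory relaxes only the warp-level constraint.
static_assert(can_fuse({64, 64, 32}, IntermediateStorage::SharedMemory), "relaxed");
static_assert(!can_fuse({128, 64, 64}, IntermediateStorage::SharedMemory), "block too narrow");
```

The threadblock-level check stays in place in both modes, since shared memory is only visible within one threadblock.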
